AI Code Enhancement

Starting with VB Decompiler 12.6, you can use artificial intelligence to improve the quality of decompiled C# code. This feature transforms code decompiled from IL into a more readable and structured form. It requires Ollama, a third-party tool for running AI models locally, which is distributed free of charge (at the time of writing).

Step 1: Installing Ollama

If you don't have Ollama installed yet:

1. Go to the official website: https://ollama.com/download

2. Download the Windows installer.

3. Run it and follow the installation instructions.

4. After installation, restart your system so that the updated Path environment variable takes effect.

5. Make sure that Ollama is running (you should see its icon in the system tray).

Note: By default, Ollama runs at the address http://localhost:11434.
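
To confirm that the server is answering at this address, a quick check from a script can help. The following is a minimal sketch that uses only the Python standard library; the helper names `root_url` and `is_ollama_running` are hypothetical and are not part of Ollama or VB Decompiler.

```python
import urllib.error
import urllib.request

DEFAULT_HOST = "http://localhost:11434"  # default address from the note above


def root_url(host: str = DEFAULT_HOST) -> str:
    """Return the server's root URL, adding a scheme if it was left out."""
    host = host.strip().rstrip("/")
    if not host.startswith(("http://", "https://")):
        host = "http://" + host
    return host + "/"


def is_ollama_running(host: str = DEFAULT_HOST, timeout: float = 3.0) -> bool:
    """Return True if an HTTP server answers with status 200 at the address."""
    try:
        with urllib.request.urlopen(root_url(host), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure and similar problems.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", is_ollama_running())
```

If the script reports that the server is not reachable, check that the Ollama icon is present in the system tray and that the port in the address matches your setup.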

Step 2: Downloading the qwen3:8b Model

1. Open Command Prompt or PowerShell.

2. Run the following command: ollama pull qwen3:8b

This may take some time depending on your internet speed.

Once downloaded, the model will be available for use within Ollama.
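
You can verify the download with the `ollama list` command, or by querying the server's `/api/tags` endpoint, which returns the locally available models as JSON. The sketch below assumes the default address and the response shape `{"models": [{"name": ...}]}`; the function names are hypothetical helpers written for this example.

```python
import json
import urllib.request


def installed_models(tags: dict) -> list[str]:
    """Extract model names from a parsed /api/tags response."""
    return [m["name"] for m in tags.get("models", []) if "name" in m]


def fetch_tags(host: str = "http://localhost:11434", timeout: float = 5.0) -> dict:
    """Ask the local Ollama server which models it has (GET /api/tags)."""
    url = host.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    names = installed_models(fetch_tags())
    print("qwen3:8b installed:", "qwen3:8b" in names)
```

The parsing is kept separate from the network call so that it can be tried on a saved response without a running server.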

System Requirements for Ollama and the qwen3:8b Model

To run Ollama and the qwen3:8b model, you’ll need relatively powerful hardware as the model is quite resource-intensive:

Minimum Requirements:

CPU: Intel i3-7100 or equivalent AMD processor with AVX2 instruction set support

RAM: 16 GB

NVIDIA GPU: RTX 2060 with 8 GB VRAM

Disk Space: 10 GB

OS: Windows 10 or later (64-bit)

Recommended Requirements:

CPU: Intel i7-7700 / Ryzen 7 or higher with AVX2 instruction set support

RAM: 64 GB

NVIDIA GPU: RTX 4060 with 16 GB VRAM

Disk Space: SSD, 15 GB or more

OS: Windows 11 (64-bit)

The qwen3:8b model can consume 10–12 GB of RAM during operation.

Step 3: Configuring VB Decompiler

Now open VB Decompiler and proceed to use the AI-assisted code improvement feature.

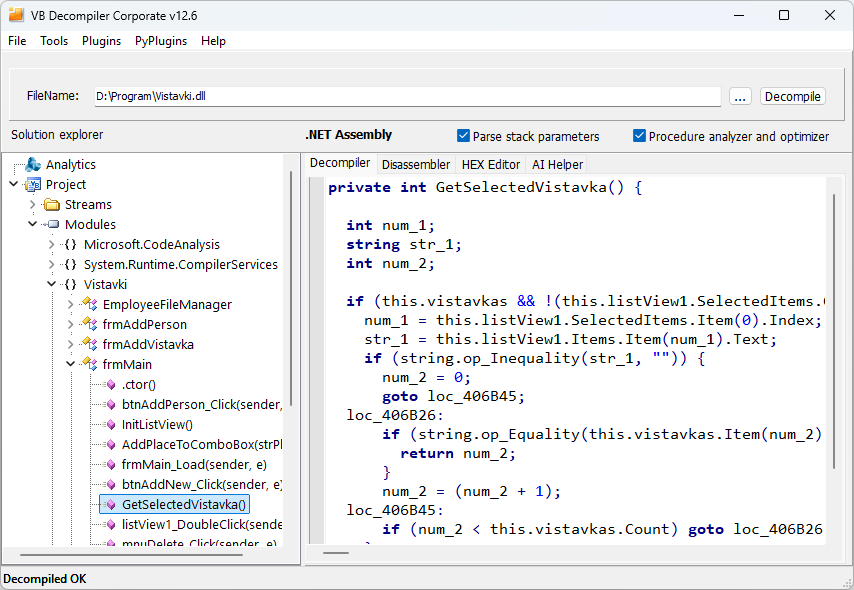

On one side of the window, there's an object tree where you can select the function you want to decompile. On the other side, there's a panel with tabs:

Decompiler – displays the decompiled code.

AI Helper – where the code is improved using AI.

Step 4: Connecting to Ollama

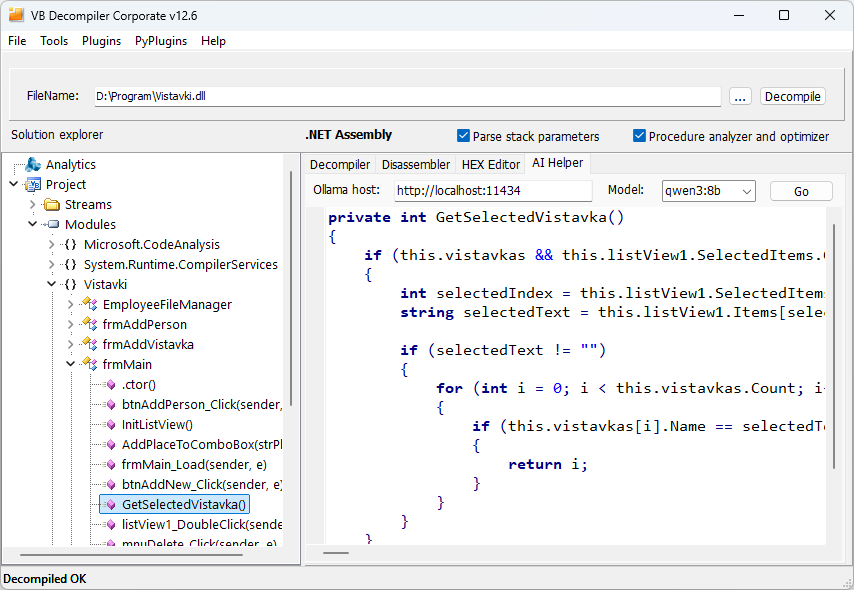

Switch to the AI Helper tab.

In the Ollama host field, enter the address where the Ollama server is running. By default: http://localhost:11434

In the Model dropdown list, select qwen3:8b. We strongly recommend using this specific model.

Step 5: Enhancing Code with AI

Click the Go button.

The program will send the current decompiled code to the Ollama model and receive an improved version.

The result will be displayed in the text field below the Go button.
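
To get a feel for what such an exchange looks like outside the program, here is a minimal sketch that sends a code fragment to Ollama's `/api/generate` endpoint. It is only an illustration: the prompt wording and the function names are assumptions made for this example, and the request that VB Decompiler actually sends is not documented here.

```python
import json
import urllib.request


def build_request(code: str, model: str = "qwen3:8b") -> dict:
    """Build a non-streaming /api/generate payload.

    The prompt text is hypothetical, not the one VB Decompiler uses.
    """
    prompt = (
        "Rewrite the following decompiled C# code so that it is more readable. "
        "Keep the behavior unchanged.\n\n" + code
    )
    return {"model": model, "prompt": prompt, "stream": False}


def enhance(code: str, host: str = "http://localhost:11434",
            timeout: float = 300.0) -> str:
    """POST the payload to Ollama and return the generated text."""
    req = urllib.request.Request(
        host.rstrip("/") + "/api/generate",
        data=json.dumps(build_request(code)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # With "stream": False the server replies with a single JSON object.
        return json.load(resp)["response"]


if __name__ == "__main__":
    print(enhance("int num = 0; while (true) { if (num >= 10) break; num++; }"))
```

The generous timeout reflects that a local model may need a while to answer, especially on hardware close to the minimum requirements.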

What Does the AI Improve?

- Recognizes and restores constructs such as for, foreach, while, do...while.

- Converts goto into structured logic like if...else.

- Replaces numeric values with known constants (e.g., 0x20000000 → FileAccess.Read).

- Simplifies complex conditions and expressions.

- Improves code readability through reformatting and renaming variables (when possible).

Possible Issues and Tips

- Ensure Ollama is running and accessible at the specified address.

- If the model doesn’t appear in the list, verify that it was properly downloaded via ollama pull.

- If processing takes too long, try using lighter models.

- For best results, use the qwen3:8b model.

Important Notice

Ollama is a third-party product developed by an independent team. The author of VB Decompiler assumes no responsibility for the correctness of Ollama’s operation, the quality of model outputs, their availability, or the results of AI-based code improvements.

Conclusion

The AI-assisted code enhancement feature significantly improves the analysis of compiled applications. Thanks to local execution via Ollama, you maintain data privacy and full control over the analysis process.